Intel Optane Cluster

Intel Ice Lake Optane Nodes

To support users whose workloads need a large amount of memory within a single shared-memory system, we offer our Intel Ice Lake Optane nodes. Each node comprises:

- 2x Intel(R) Xeon(R) Gold 6338 CPUs with 64 physical cores in total (no hyper-threading)

- 512 GB of DRAM (DDR4-3200)

- 2 TB of Non-Volatile Memory (NVM) in the form of Intel Optane DC Persistent Memory DIMMs

Although NVM has higher latency and lower bandwidth than DRAM, it offers a higher capacity and is still much faster than SSD storage.

System Configuration

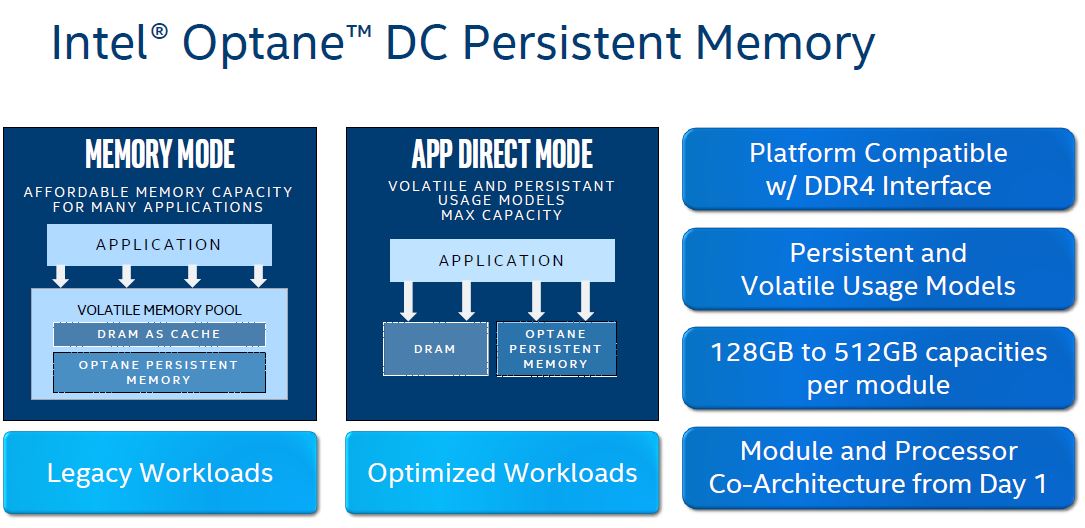

Systems with Intel Optane DC Persistent Memory can, in principle, be configured in the following ways, which are also illustrated in Figure 1.

- Memory Mode (also called 2LM): The faster DRAM serves as an additional, hardware-managed cache in front of the larger NVM. Users do not need to manage data placement explicitly and can use the system like any other system with regular DRAM.

- App Direct Mode (also called 1LM): DRAM and Optane are separately addressable. To use the NVM, the user must explicitly manage data allocation, e.g. with the memkind library or with low-level API calls.

Figure 1 source: Intel Corp.

At RWTH Aachen University, the Optane systems are configured in Memory Mode. This provides transparent access to high-capacity memory while still benefiting from the fast DRAM as an additional cache level. Currently, four nodes are publicly available.
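In Memory Mode the NVM appears to the operating system as ordinary memory, so one quick sanity check inside a job is to add up the per-NUMA-node memory sizes reported by numactl -H; on an Optane node they should total roughly 2 TB. The following is a minimal sketch of such a check. The function name numa_total_mb is hypothetical, and the parsing assumes the "node N size: X MB" line format that numactl -H prints.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `numactl -H` output from standard input,
# sum the "node N size: X MB" lines, and print the total in MB.
numa_total_mb() {
    awk '$1 == "node" && $3 == "size:" { total += $4 } END { print total + 0 }'
}

# Example use inside a job on a compute node:
#   numactl -H | numa_total_mb
```

For the two node sizes reported on these nodes (1015123 MB and 1016053 MB), this prints 2031176, i.e. roughly 2 TB, which matches the NVM capacity rather than the 512 GB of DRAM.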

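Example output of numactl -H showing the NUMA configuration. In Memory Mode, only the NVM capacity is visible to the operating system (about 1 TB per NUMA node, 2 TB in total); the 512 GB of DRAM acts as a cache and does not appear as separate memory.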

    $ numactl -H
    available: 2 nodes (0-1)
    node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31
    node 0 size: 1015123 MB
    node 0 free: 1010840 MB
    node 1 cpus: 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63
    node 1 size: 1016053 MB
    node 1 free: 1007228 MB
    node distances:
    node   0   1
      0:  10  20
      1:  20  10

Access

To get access to these systems, please contact servicedesk@itc.rwth-aachen.de. Note: These are special-purpose systems intended for users with a high demand for large shared-memory workloads. Please briefly justify your requirements.

Slurm

Add the following to your Slurm submission script:

#SBATCH --partition=optane
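A complete batch script for these nodes might look like the following minimal sketch. It assumes a single-node OpenMP program that uses all 64 physical cores; the time limit, the job name and the program name ./my_shared_memory_app are hypothetical placeholders, and any site-specific options (such as project accounts or memory requests) would need to be added.

```shell
#!/usr/bin/env bash
# Request the Optane partition: one node, one task using all 64 physical cores.
#SBATCH --partition=optane
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=64
# Placeholder time limit and job name; adjust them to your workload.
#SBATCH --time=01:00:00
#SBATCH --job-name=optane_example

# One OpenMP thread per physical core; falls back to 64 when run outside Slurm.
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-64}"

# Record the node's NUMA layout in the job output.
numactl -H

# Hypothetical application name; replace it with your own program.
./my_shared_memory_app
```

The script would then be submitted with sbatch, for example sbatch optane_job.sh (the file name is hypothetical).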