JupyterLab and Slurm

The JupyterLab web interfaces run in the HPC Compute cluster as Slurm jobs.

A user selects a default JupyterLab Profile (a configuration of cores, runtime, etc.), customizes it, and submits it to the queue of the Slurm batch system.

This functions like any other Slurm job, with the advantage that it is all done through a webpage.

The Slurm settings used for granting hardware to JupyterLab can be configured according to the ‘sbatch’ documentation of the installed Slurm version.

These settings behave identically to the sbatch settings used in regular batch job scripts.
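
As an illustration of how a Profile maps onto ordinary sbatch settings, the following minimal sketch renders a profile as `#SBATCH` directive lines. The profile keys and the `profile_to_directives` helper are hypothetical and not part of the HPC JupyterHub. The options themselves (`--cpus-per-task`, `--mem`, `--time`, `--gres`) are standard sbatch options, but which of them a site accepts depends on the installed Slurm version and the local configuration.

```python
def profile_to_directives(profile):
    """Render a (hypothetical) profile dict as #SBATCH directive lines."""
    lines = [
        f"#SBATCH --cpus-per-task={profile['cores']}",
        f"#SBATCH --mem={profile['memory']}",
        f"#SBATCH --time={profile['runtime']}",
    ]
    # Request GPUs only when the profile asks for them.
    gpus = profile.get("gpus", 0)
    if gpus > 0:
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    return lines


# Example profile; the values are illustrative, not site defaults.
example_profile = {"cores": 4, "memory": "16G", "runtime": "02:00:00"}
print("\n".join(profile_to_directives(example_profile)))
```

The same lines could appear at the top of a regular batch script, which is why the settings behave the same in both places.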

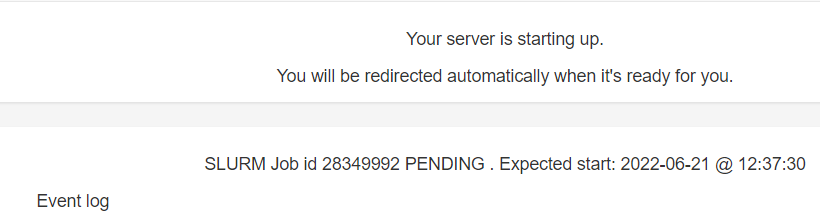

Waiting in queue

Queuing/waiting is inherent to HPC hardware and HPC workflow, but we try to allocate JupyterLab instances as fast as possible.

To get access to a JupyterLab instance quickly, users must request 8 or fewer cores.

Because the HPC JupyterHub uses Slurm to allocate HPC hardware, users who require GPUs or more than 8 cores need to wait for their request to be processed in the Slurm queue.
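
The rule above can be written down as a small check. This is only a sketch of the rule as stated in this section: the `needs_queue_wait` helper and the constant name are hypothetical, and the actual scheduling decision is made by Slurm, not by code like this.

```python
# Core threshold taken from the text above; the name is hypothetical.
FAST_PATH_MAX_CORES = 8


def needs_queue_wait(cores, gpus=0):
    """Return True if a request should expect to wait in the Slurm queue."""
    # Any GPU request, or more than 8 cores, goes through the regular queue.
    return gpus > 0 or cores > FAST_PATH_MAX_CORES


print(needs_queue_wait(cores=4))           # small CPU-only request
print(needs_queue_wait(cores=4, gpus=1))   # GPU request
print(needs_queue_wait(cores=16))          # large CPU request
```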

Once the user selects a Profile and starts it, the request waits for the hardware resources to become available from Slurm.

Users receive an estimated start time for convenience.

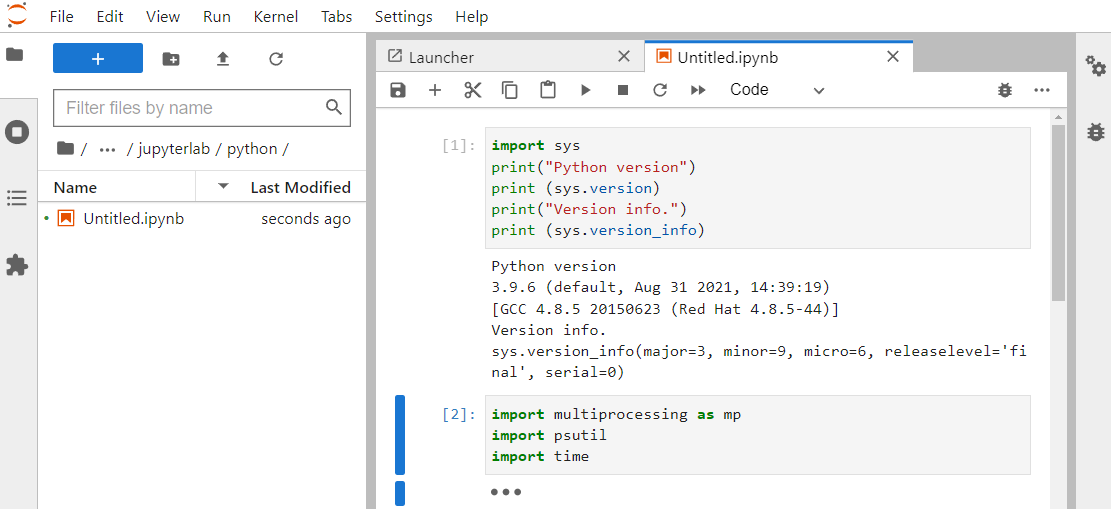

Once the hardware is granted, the JupyterLab instance, which hosts the Jupyter Notebooks, is started and the user is connected to it through the web interface.

Runtime for JupyterLab

The user can then work in this JupyterLab instance, which runs on a remote HPC node, until the runtime of the Slurm job ends.

This runtime must be defined before submission and cannot be changed while the JupyterLab Slurm job is running.

Choose a runtime long enough for the interactive session to be completed before the Slurm job ends.
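
Since the runtime cannot be extended later, it can help to plan it with some margin. The sketch below adds a safety margin to the planned working time and formats the result as `days-hours:minutes:seconds`, one of the time formats accepted by sbatch's `--time` option. The `slurm_time` helper and the 30-minute margin are assumptions for illustration, and the site may impose its own maximum runtime.

```python
from datetime import timedelta


def slurm_time(duration):
    """Format a timedelta as a Slurm time string (D-HH:MM:SS)."""
    total = int(duration.total_seconds())
    if total <= 0:
        raise ValueError("runtime must be positive")
    days, rem = divmod(total, 86400)
    hours, rem = divmod(rem, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{days}-{hours:02d}:{minutes:02d}:{seconds:02d}"


# Planned working time plus an illustrative safety margin.
planned = timedelta(hours=3)
margin = timedelta(minutes=30)
print(slurm_time(planned + margin))
```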