File Systems Overview

Each HPC user and each computing time project is allocated storage space on the HPC cluster to support research and computational tasks. The additional storage offered with computing time projects is especially useful for collaborative work. The cluster offers several file systems, each with its own advantages and limitations. This page provides an overview of these storage options.

Key Points:

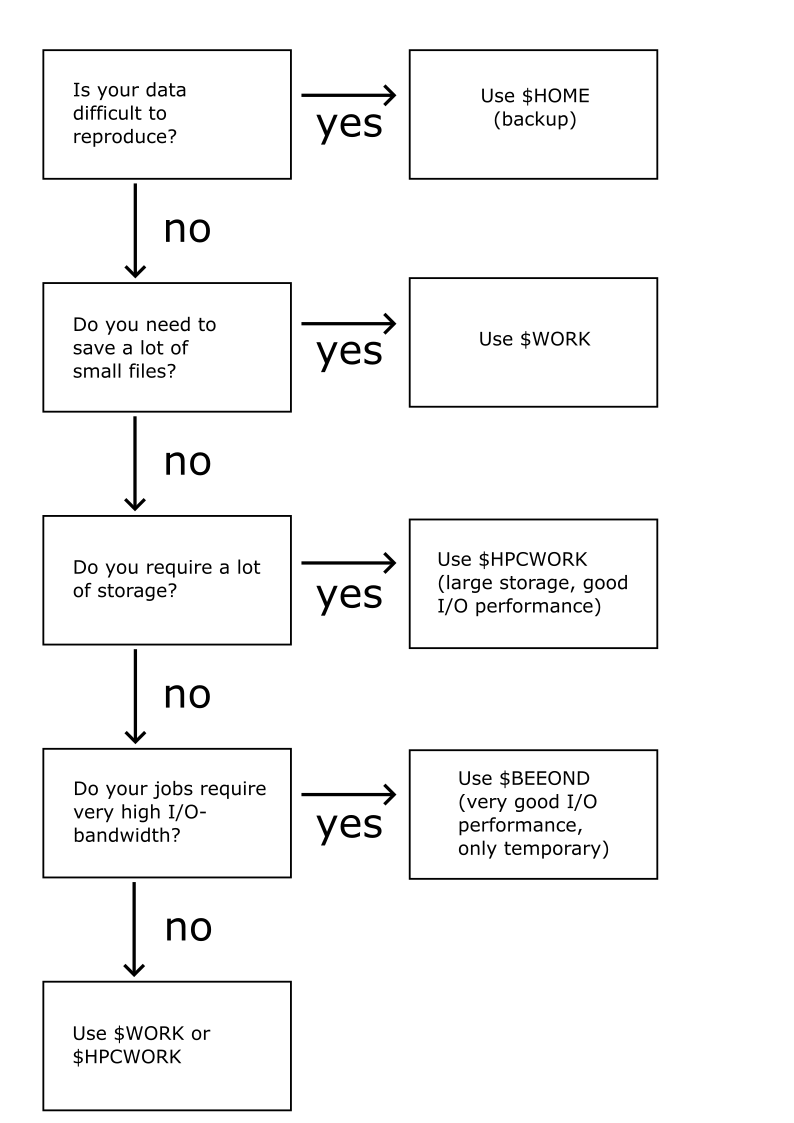

- The appropriate file system for a workflow depends on the task at hand. Understanding the differences between the file systems described on this page helps with this evaluation, and the decision tree below may help as well.

- Users are primarily responsible for backing up their data. The HPC cluster is not intended as a long-term data storage solution, so it is crucial to back up data regularly to avoid potential loss.

- We do not offer increases in storage quotas for individual users. We do, however, offer quota increases for computing time projects.

The three permanent file systems are $HOME, $WORK, and $HPCWORK. To navigate to your personal storage space, use the cd command followed by the file system's name. For example, cd $WORK takes you to your storage space on $WORK. For computing time projects, follow instructions here.
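As a minimal sketch of the navigation described above, the following helper prints where each of the three variables points for the current user. The function name show_storage_paths is hypothetical and not part of the cluster's tooling; only the variable names HOME, WORK and HPCWORK come from this page.

```shell
# Print the location of each permanent file system for the current user.
# HOME, WORK and HPCWORK are the environment variables named on this page.
# show_storage_paths is a hypothetical helper, not a cluster command.
show_storage_paths() {
    for name in HOME WORK HPCWORK; do
        # Indirect variable lookup that works in sh, bash and zsh.
        eval "path_value=\${$name:-}"
        if [ -n "$path_value" ]; then
            printf '%s -> %s\n' "$name" "$path_value"
        else
            printf '%s is not set\n' "$name"
        fi
    done
}
```

On the cluster, calling show_storage_paths and then cd "$WORK" would list the paths and change into the personal directory on $WORK. Outside the cluster, $WORK and $HPCWORK are usually unset and are reported as such.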


The following table summarizes the available file systems and gives additional details on each of them.

| File System | Type | Path | Persistence | Snapshots | Backup | Quota (space) | Quota (number of files) | Use Cases |
|---|---|---|---|---|---|---|---|---|
| $HOME | NFS/CIFS | /home/<username> | permanent | $HOME_SNAPSHOT | yes | 150 GB | - | Source code, configuration files, important results |
| $WORK | NFS/CIFS | /work/<username> | permanent | $WORK_SNAPSHOT | no | 250 GB | - | Many small working files |
| $HPCWORK | Lustre | /hpcwork/<username> | permanent | - | no | 1000 GB | 1 million | I/O-intensive computing jobs, large files |
| $BEEOND | BeeOND | stored in $BEEOND | temporary | - | no | limited by the sum of sizes of local disks | - | I/O-intensive computing jobs, many small working files, any kind of scratch data |

$HOME

Upon logging into the HPC cluster, users are placed in their personal home directory within $HOME. As a Network File System (NFS) with an integrated backup solution, this file system is particularly well suited for storing important results that are difficult to reproduce, as well as for developing code projects. However, $HOME is less suitable for running large-scale computing jobs due to its limited space of 150 GB. In addition, frequently transferring, creating, and deleting large numbers of files puts significant strain on the backup system.

$WORK

$WORK shares some similarities with $HOME, as it is also operated on an NFS. However, the key difference is that $WORK has no backup solution. This absence of backups allows for a more generous storage quota of 250 GB.

$WORK is particularly suitable for computing jobs that are not heavily dependent on I/O performance and that generate numerous small files.

$HPCWORK

The $HPCWORK file system is based on Lustre, which allows for larger storage space and improved I/O performance compared to $HOME and $WORK. Each user and each computing time project is granted a default quota of 1000 GB on this file system. In addition, the system can handle extremely large files and fast parallel access to them.

These benefits are possible in part because the metadata of files is stored in metadata (MD) databases and handled by specialized servers. However, each file, regardless of its size, occupies a similar amount of space in the MD database. To keep the number of MD database entries per user manageable, there is also a quota on the number of files on $HPCWORK. The default file quota is 1 million files.
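To get a rough idea of how close a directory tree is to a file-count limit, a sketch like the following may help. The function names are hypothetical, the default limit of 1000000 simply mirrors the default quota stated above, and the count covers regular files only, so the file system's own accounting may differ from this number.

```shell
# Count regular files below a directory.
# Note: file names containing newlines would be counted more than once.
count_files() {
    find "$1" -type f | wc -l | tr -d ' '
}

# Compare the count with a limit (default: 1000000, the quota stated above).
# Returns non-zero when the limit is reached, so scripts can react to it.
check_file_quota() {
    dir=$1
    limit=${2:-1000000}
    used=$(count_files "$dir")
    echo "$used of $limit files used in $dir"
    [ "$used" -lt "$limit" ]
}
```

A call such as check_file_quota "$HPCWORK/myproject" (with myproject as a placeholder) walks the whole tree, which is itself metadata-intensive, so it is best run on a subdirectory rather than repeatedly on all of $HPCWORK. Lustre also provides an lfs quota command; whether it is available to users on this cluster is not stated on this page.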

Note that $HPCWORK also provides no backup solution.
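Since neither $WORK nor $HPCWORK is backed up, one possible approach is to pack important directories into an archive and store it somewhere that is backed up. The following is only a sketch: archive_dir is a hypothetical helper, and the destination directory is an assumption that has to be replaced with a location you actually control.

```shell
# Pack one directory into a dated, compressed tar archive.
#   $1: directory to archive (for example a results folder on $HPCWORK)
#   $2: destination directory (assumption: a location that is backed up)
# Prints the path of the archive that was written.
archive_dir() {
    src=$1
    dest=$2
    stamp=$(date +%Y-%m-%d)
    archive="$dest/$(basename "$src")-$stamp.tar.gz"
    mkdir -p "$dest" || return 1
    # -C switches to the parent directory so the archive holds relative paths.
    tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    echo "$archive"
}
```

Bundling many small files into a single archive also keeps the number of files low, which matters on file systems with a file-count quota.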

$BEEOND

For computing jobs with high I/O performance demands, users can leverage the internal SSDs of the nodes. The BeeOND (BeeGFS on Demand) temporary file system enables users to utilize the SSD storage across all requested nodes as a single, parallel file system within a single namespace.

Key Considerations when using BeeOND:

- The amount of allocated storage depends on the type and number of requested nodes (for more information click here).

- Computing jobs that request BeeOND become exclusive.

- Within the job, the file system path for BeeOND is accessible via the environment variable $BEEOND.

- The storage space on the file system is strictly temporary! All files are automatically deleted after the computing job concludes.
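Because the BeeOND path only exists inside a job that requested it, a job script may want to check the variable before copying data. The guard below is a minimal sketch; require_beeond is a hypothetical name, and only the BEEOND variable itself comes from this page.

```shell
# Stop early when the BeeOND path is not usable.
# BEEOND is the variable named above; require_beeond is a hypothetical helper.
require_beeond() {
    if [ -z "${BEEOND:-}" ]; then
        echo "BEEOND is not set; was BeeOND requested for this job?" >&2
        return 1
    fi
    if [ ! -d "$BEEOND" ]; then
        echo "BEEOND points to a missing directory: $BEEOND" >&2
        return 1
    fi
}
```

In a job script, a line such as require_beeond || exit 1 placed before the first copy command would make the job fail with a clear message instead of writing to an unintended location.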

This example job script shows how to use BeeOND:

```zsh
#!/usr/bin/zsh

### Request BeeOND
#SBATCH --beeond

### Specify other Slurm commands

### Copy files to BeeOND
cp -r "$WORK/yourfiles" "$BEEOND"

### Navigate to BeeOND
cd "$BEEOND/yourfiles"

### Perform your job
echo "hello world" > result

### Afterwards, copy results back to your partition
cp -r "$BEEOND/yourfiles/result" "$WORK/yourfiles/"
```
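Since everything on BeeOND is deleted when the job ends, it can be useful to copy results back even when a command in the script fails. The following sketch registers the copy step as an exit handler. The name stage_out is hypothetical, the paths reuse the placeholders from the example job script, and a job that is killed at its time limit may not get the chance to run the handler.

```shell
# Copy a result file or directory from scratch storage to a permanent location.
#   $1: source on the temporary file system
#   $2: destination directory on a permanent file system
# stage_out is a hypothetical helper, not a cluster command.
stage_out() {
    src=$1
    dest=$2
    mkdir -p "$dest" && cp -r "$src" "$dest"/
}

# In a job script, register the copy once, before the actual work starts:
#   trap 'stage_out "$BEEOND/yourfiles/result" "$WORK/yourfiles"' EXIT
```

With the trap in place, the shell runs stage_out whenever the script exits, whether it finished normally or stopped on an error.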

If you are unsure which file system to use for your computing jobs, the following decision tree may help: